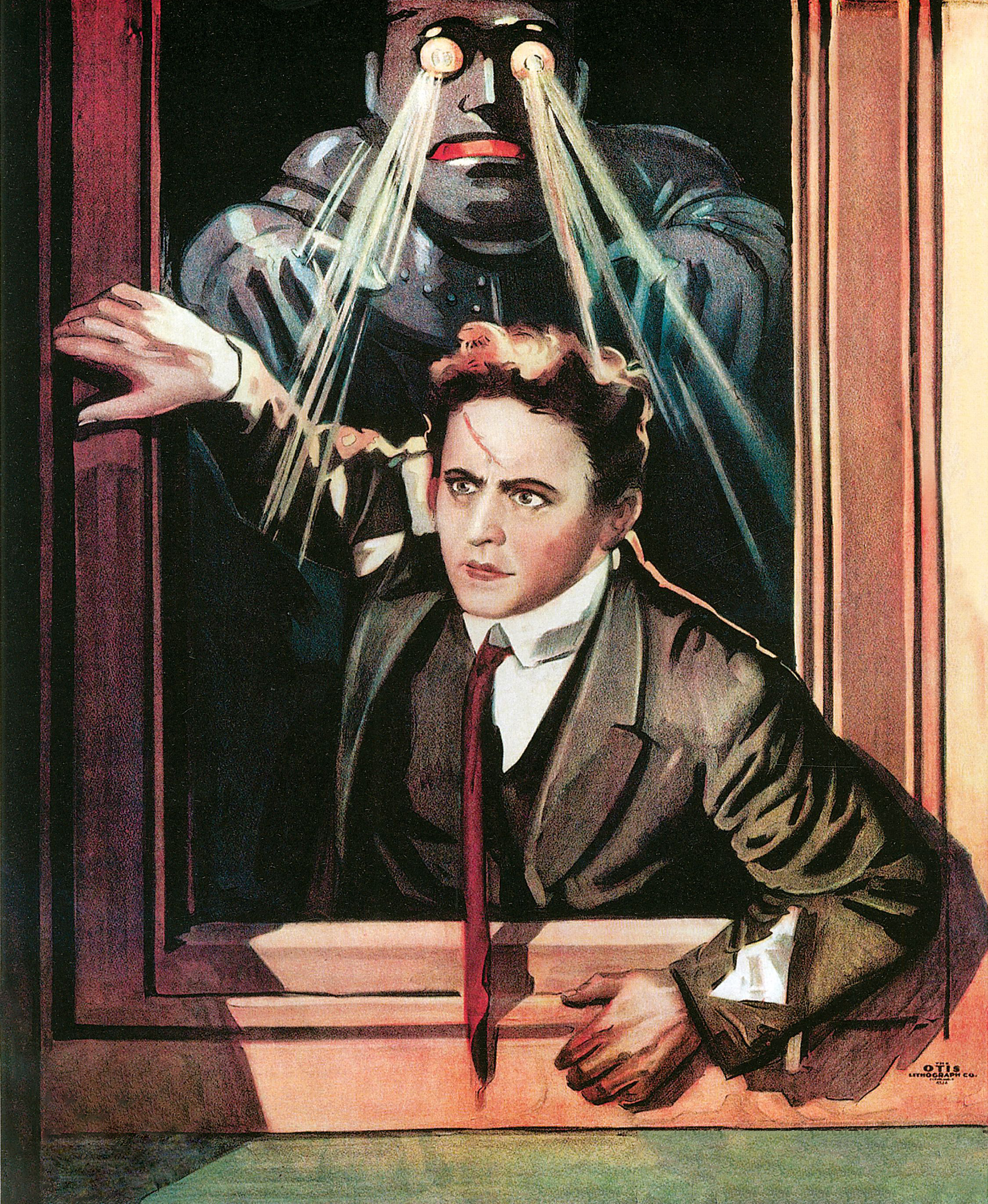

If Robots Kill, Who is to Blame?

Killer Robots, Then and Now

As you read this, the world’s militaries are feverishly competing to develop killer robots. We have long had ‘autonomous’ weapons that act on their own. Landmines, which detonate automatically when stepped on, are autonomous in this limited sense.

In the Cold War, nations developed so-called ‘Dead Hand’ mechanisms that could launch a nuclear response to an attack even in the absence of a human command. Autonomous weapons share the ability to operate on their own, without direct human control. Yet not all forms of autonomy are the same. The weapons of the near future will run on code that changes and evolves over time.

Today, new and shuddersome technologies are on the horizon. Drone swarms are among the nightmarish next steps in the development of weapons that can ‘pull the trigger’ themselves. The coming weapons will bear little resemblance to those of yesteryear. Rather than responding to a simple stimulus such as a footstep, as landmines do, weapons built with AI and machine learning will reason, evolve, and grow. They will be able to learn from new experiences and make decisions based on past ones. The autonomous weapons of the 21st century will weigh options, distinguish combatants from non-combatants, calculate risk, and do so differently over time. These novel arms are defined by their ability to innovate and evolve, eventually making decisions that their programmers and commanders did not foresee.

What’s So Bad about Autonomous Weapons?

The prospect of increasingly autonomous weapons poses a philosophical challenge. If a robot is truly autonomous and it commits a crime, who is to blame? Some worry that the autonomous weapons of the near future may be so autonomous that it will not be possible to blame either their commanders or their programmers. For philosophers of war, however, having someone to hold accountable for atrocities is critical. In other words, if there is a war crime, there must be a war criminal.

In the words of the ‘Stop Killer Robots’ campaign, an “accountability gap would make it difficult to ensure justice, especially for victims.”

Today, many campaigns are working to outlaw these new lethal autonomous weapons before they arrive. Their message has been echoed by notable figures and institutions such as AI legend Stuart Russell, the Bulletin of the Atomic Scientists, U.N. Secretary-General António Guterres, and the Holy See (the Pope). Should autonomous weapons be this controversial? To find out, we will borrow some arguments from ‘Killer Robots’, a now-famous paper by philosopher Robert Sparrow of Monash University. Sparrow explores robots and responsibility by comparing autonomous weapons to child soldiers.

Child Soldiers and Responsibility

Why should we prohibit the ‘use of children in hostilities’? Beyond the obvious harm to children and the infringement of their rights, there is another ethically troubling facet to the use of child soldiers: responsibility. Imagine that a commander sends a group of armed children to attack an enemy position. For some reason, however, they fire on and kill civilians. A war crime has been committed. Who is responsible?

It is not immediately clear who is responsible when child soldiers kill innocents. If a child is too young to consent to fight, it follows that they are too young to be held accountable for their conduct in combat. You might think that the people who conscripted the children and sent them to fight are responsible. While they are undoubtedly responsible for exposing children to danger, and perhaps even liable for the harm the children cause, it is not clear that they are responsible for the crimes the children commit.

Children are able to make decisions and are thus autonomous in this sense. However, they are typically responsible for their actions only in a causal sense, not an ethical one. Even though they caused something to happen, we do not consider them fully ‘responsible’, ethically or legally, in the way we would an adult.

Now, instead of children at war, imagine a commander sending robots. Imagine autonomous machines going out and killing innocent people. Their decisions are products of AI and machine learning. Who is responsible in this case?

Robert Sparrow argued in ‘Killer Robots’ that neither children nor robots can be regarded as appropriate targets for responsibility, let alone punishment. In fact, he argued that no one can be held adequately responsible for war crimes committed in these cases.

Who is to Blame?

A common response is to insist that the commander who sends robots to fight can and should be held responsible. Yet these robots are autonomous. They make decisions independently, according to their own logic. If a commander sends soldiers to fight, ordering them to engage only the enemy, but they instead fire on non-combatants, we can attribute responsibility fairly easily. In that case, the soldiers are guilty of what they did. If a commander sends robots to fight, ordering them to engage only the enemy, but for some reason they decide to attack civilians, can we attribute responsibility as easily?

Sparrow sees a dilemma. Robots that can make decisions independently on the battlefield are, like children, autonomous. If a commander is held responsible for the misdeeds of a robot, this implies that the robot was not in fact autonomous. But if the robot truly is autonomous, holding someone else responsible for its decisions would be unjust.

If a robot really is making its own decisions, they are its own decisions and not the commander’s. Thus we can’t hold a commander responsible for a robot’s decisions.

One might insist that a commander who sends children or robots to fight is still guilty of something. Probably so. In law, commanders can be held responsible for crimes committed by their subordinates, a doctrine known as command responsibility, and they have been in the past. Typically, though, command responsibility applies only if a commander ordered soldiers to act criminally, or knew about the crimes and did nothing. If a commander did not know about, did not order, and could not feasibly have foreseen or prevented an atrocity, it is hard to argue that the commander is still responsible.

If not the commander, then who? Surely someone. Another usual suspect is the programmer. If a robot is programmed, then the criminal decisions its programming produces might be attributable to those who wrote the code. This logic might apply to the designers of landmines and smart bombs. Yet the autonomous weapons of tomorrow will be capable of learning and changing themselves. The code a programmer writes may not be the code that commits atrocities later on.

So, Should We Ban Killer Robots?

For Sparrow, autonomous weapons create a problem: they make it difficult to attribute responsibility for wartime atrocities. None of the likely suspects is satisfactory, he argues. Like children, robots are causally, but not morally, responsible. Nor are commanders or programmers responsible, if a weapons system is truly autonomous and evolving. Sparrow’s fear is that we risk creating war crimes without war criminals. Do you share his concern?